Virtual Faces

The face is one of the most crucial aspects of virtual characters, since humans are extremely sensitive to artifacts in the geometry, the textures, or the animation of digital faces. This makes capturing and animating human faces highly challenging.

Analyzing the so-called Uncanny Valley effect for the perception of virtual faces helps us understand what the important properties and features of virtual faces are.

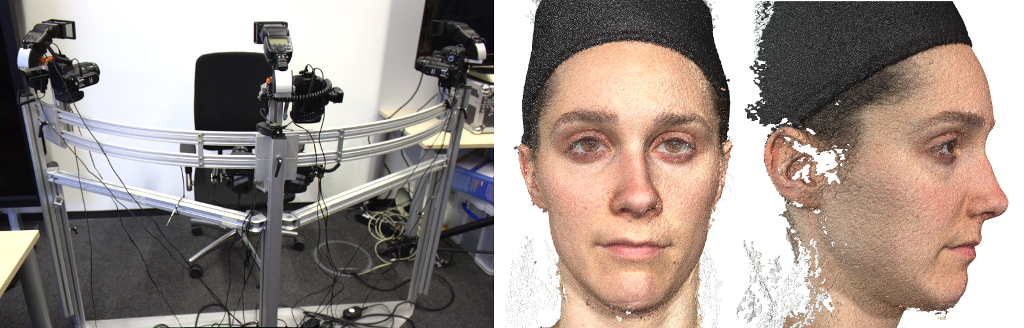

We generate realistic face models by 3D-scanning real persons with a custom-built multi-view face scanner, which reconstructs a high-resolution point cloud from eight synchronized photographs of a person’s face. Figure 1 shows both the face scanner and the resulting point cloud.

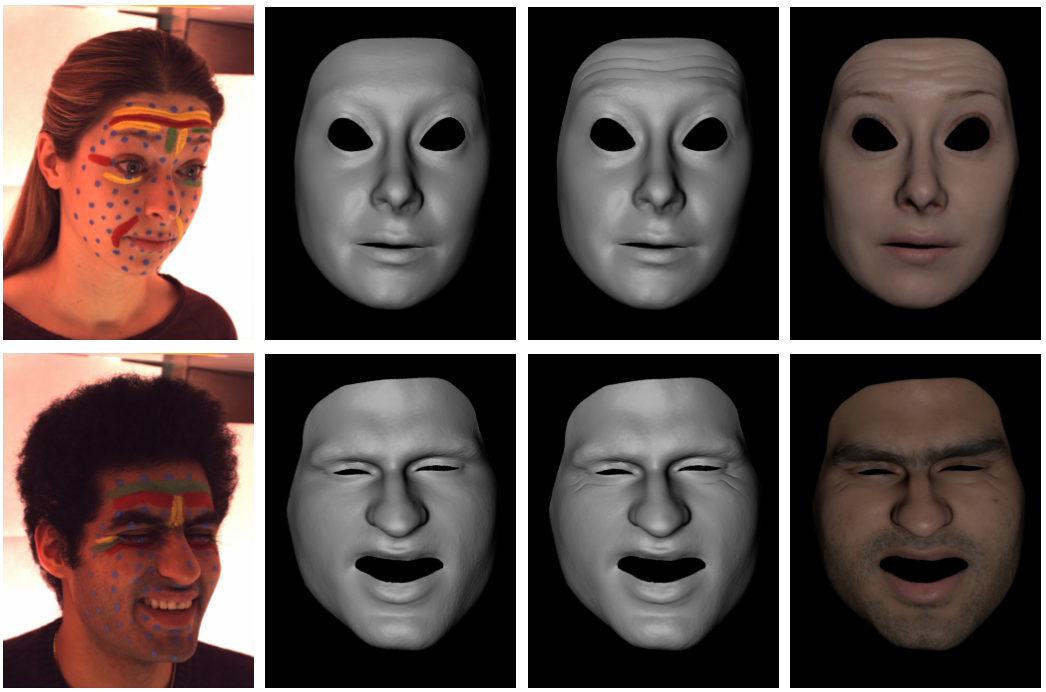

In order to cope with scanner noise and missing data, we fit a morphable template model (the Face Warehouse model) to the point data. To this end we first adjust position, orientation, and PCA parameters, and then employ an anisotropic shell deformation to accurately deform the template model to the scanner data (Achenbach et al., 2015). Reconstructing the texture from the photographs and adding eyes and hair finally leads to a high-quality virtual face model, as shown in Figure 2.
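
The PCA-parameter step of such a template fit can be illustrated with a small least-squares example. This is only a minimal sketch under strong assumptions, not the procedure of Achenbach et al. (2015): it assumes the scan is already rigidly aligned and in one-to-one correspondence with the template vertices, it uses synthetic data instead of the Face Warehouse model, and the function name `fit_pca_coefficients` and the regularization weight `reg` are hypothetical.

```python
import numpy as np

def fit_pca_coefficients(mean_shape, basis, target, reg=1e-3):
    """Solve min_c ||mean_shape + basis @ c - target||^2 + reg * ||c||^2.

    mean_shape: (3n,) flattened mean face of the morphable model
    basis:      (3n, k) PCA basis vectors stored as columns
    target:     (3n,) flattened target points (assumed aligned and in
                correspondence with the template vertices)
    """
    k = basis.shape[1]
    lhs = basis.T @ basis + reg * np.eye(k)   # regularized normal equations
    rhs = basis.T @ (target - mean_shape)
    return np.linalg.solve(lhs, rhs)

# Synthetic toy data (hypothetical, not real face data).
rng = np.random.default_rng(0)
n_vertices, k = 50, 4
mean_shape = rng.normal(size=3 * n_vertices)
basis, _ = np.linalg.qr(rng.normal(size=(3 * n_vertices, k)))  # orthonormal columns
true_coeffs = np.array([1.5, -0.5, 0.25, 2.0])
noise = 0.001 * rng.normal(size=3 * n_vertices)                # simulated scanner noise
target = mean_shape + basis @ true_coeffs + noise

coeffs = fit_pca_coefficients(mean_shape, basis, target, reg=1e-6)
fitted = mean_shape + basis @ coeffs   # template shape explained by the PCA model
```

The regularization term keeps the coefficients close to the mean face when the data is noisy or incomplete; whatever the PCA model cannot explain is left to the subsequent non-rigid deformation step.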

When it comes to face animation, most people employ blend shape models, which, however, require the cumbersome modeling or scanning of many facial expressions. In a past project (Bickel et al., 2007) we aimed to achieve realistic face animations from just a single high-resolution face scan. We tracked a facial performance using a facial motion-capture system, which was augmented by two synchronized video cameras for tracking expression wrinkles. Based on the MoCap markers, we deformed the face scan using a fast linear shell model, which yields a large-scale deformation without fine-scale details. The fine-scale wrinkles were tracked in the video images and then added to the face mesh using a nonlinear shell model. While this method produced high-quality face animations including expression wrinkles (Figure 3), it was computationally very expensive: for each frame of an animation, computing the deformed facial geometry took about 20 min, and a high-quality skin rendering took another 20 min.
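
For comparison, the blend shape models mentioned at the start of this paragraph can be sketched in a few lines. The example below uses the common delta formulation, where each expression contributes its offset from the neutral face scaled by a weight. The function name `blend` and the tiny three-vertex "face" are hypothetical and only serve as illustration.

```python
import numpy as np

def blend(neutral, expressions, weights):
    """Delta blend shapes: neutral + sum_i w_i * (expression_i - neutral).

    neutral:     (n, 3) vertex positions of the neutral face
    expressions: (m, n, 3) vertex positions of m modeled or scanned expressions
    weights:     (m,) blend weights, typically in [0, 1]
    """
    deltas = expressions - neutral                      # per-expression offsets
    return neutral + np.tensordot(weights, deltas, axes=1)

# Hypothetical toy mesh with three vertices and two expressions.
neutral = np.zeros((3, 3))
smile = neutral.copy()
smile[0] = [0.0, 1.0, 0.0]     # first vertex moves up
frown = neutral.copy()
frown[1] = [0.0, -2.0, 0.0]    # second vertex moves down
expressions = np.stack([smile, frown])

face = blend(neutral, expressions, np.array([0.5, 0.25]))
```

Every expression in `expressions` has to be modeled or scanned beforehand, which is the effort that the single-scan approach described above tries to avoid.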

In a follow-up project (Bickel et al., 2008) we aimed at real-time animations of detailed facial expressions. The large-scale deformation is again computed using a linear deformation model, which we accelerated through precomputed basis functions. Fine-scale facial details are incorporated using a novel pose-space deformation technique, which learns the correspondence between sparse measurements of skin strain and wrinkle formation from a small set of example poses. Both the large-scale and fine-scale deformation components are computed on the graphics processor (GPU), taking only about 30 ms per frame. The skin rendering, including subsurface scattering, is also implemented on the GPU, such that the whole system runs at about 15 frames per second (Figure 4).
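
The idea of learning a mapping from sparse strain measurements to fine-scale detail can be illustrated with generic scattered-data interpolation. The sketch below uses Gaussian radial basis functions over a handful of example poses. It is an assumption-laden illustration, not the formulation of Bickel et al. (2008): the kernel, its width `sigma`, the function names, and the toy numbers are all hypothetical, and a real system would evaluate this on the GPU.

```python
import numpy as np

def gaussian_kernel(a, b, sigma):
    """Pairwise Gaussian RBF values between rows of a (p, s) and b (q, s)."""
    sq_dist = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def train_psd(example_strains, example_details, sigma=1.0):
    """Solve for RBF weights that reproduce the example details exactly."""
    phi = gaussian_kernel(example_strains, example_strains, sigma)   # (p, p)
    return np.linalg.solve(phi, example_details)                     # (p, d)

def evaluate_psd(strain, example_strains, rbf_weights, sigma=1.0):
    """Interpolate the fine-scale detail for a new strain measurement."""
    phi = gaussian_kernel(strain[None, :], example_strains, sigma)   # (1, p)
    return (phi @ rbf_weights)[0]

# Three hypothetical example poses: two strain values each, and a
# four-dimensional detail vector (e.g. flattened wrinkle displacements).
example_strains = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
example_details = np.array([[0.0, 0.0, 0.0, 0.0],
                            [0.2, 0.1, 0.0, 0.0],
                            [0.0, 0.0, 0.3, 0.1]])

rbf_weights = train_psd(example_strains, example_details)            # offline step
detail = evaluate_psd(np.array([0.5, 0.5]), example_strains, rbf_weights)
```

Training happens once on the example poses; at run time only the kernel evaluation and one small matrix product are needed per frame, which is what makes this kind of interpolation attractive for real-time use.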

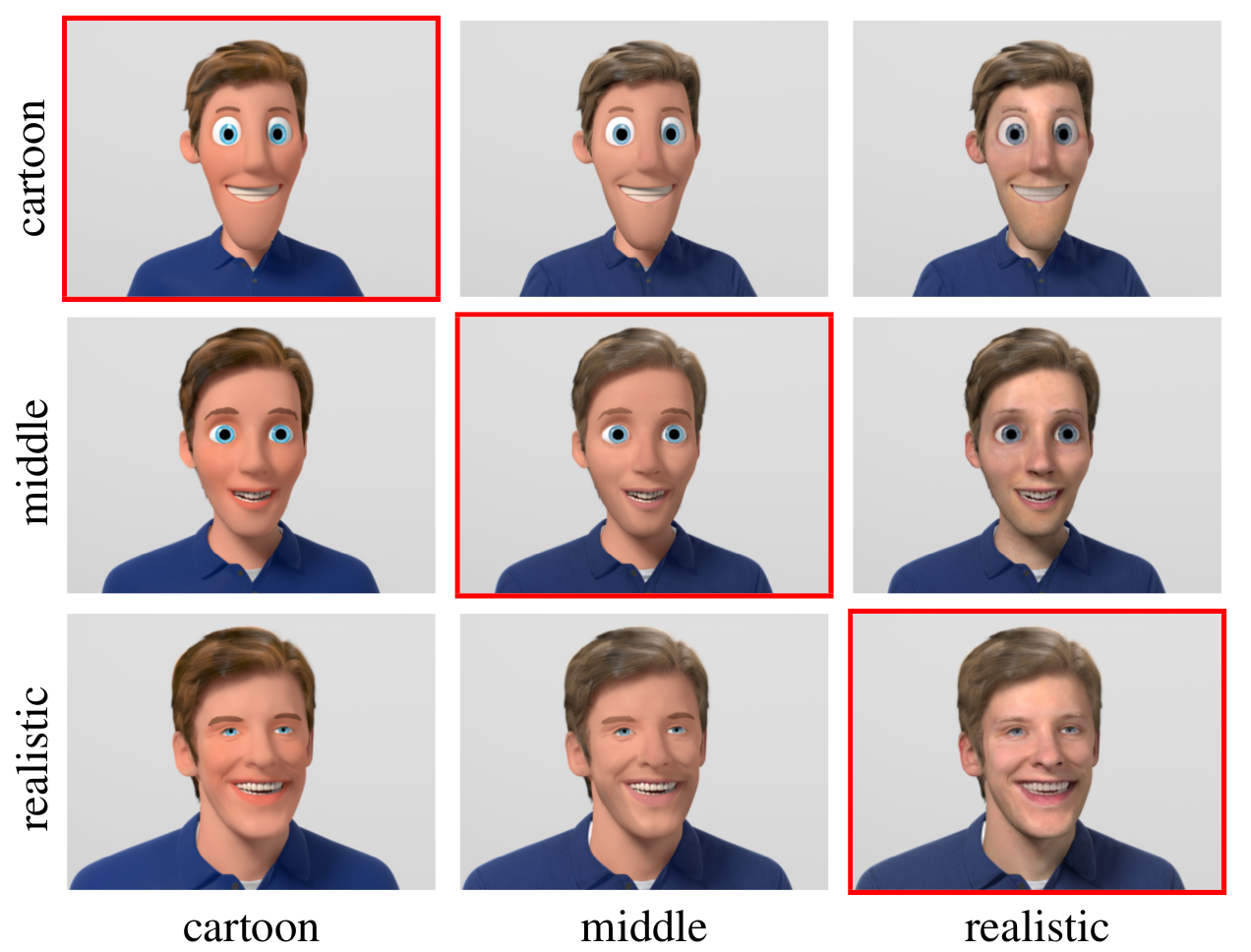

In many cases virtual characters do not have to look as realistic as possible, but can also be modeled in a more stylized or cartoony manner. In fact, artists often rely on stylization to increase appeal or expressivity, by exaggerating or softening specific facial features. In (Zell et al., 2015) we analyzed two of the most influential factors that define how a character looks: shape and material. With the help of artists, we designed a set of carefully crafted stimuli consisting of different stylization levels for both parameters (Figure 5), and analyzed how different combinations affect the perceived realism, appeal, eeriness, and familiarity of the characters. We additionally investigated how stylization affects the perceived intensity of different facial expressions (sadness, anger, happiness, and surprise). Our experiments revealed that shape is the dominant factor when rating realism and expression intensity, while material is the key component for appeal. Furthermore, our results show that realism alone is a bad predictor for appeal, eeriness, or attractiveness. An EEG study on how humans perceive stylized faces can be found in (Schindler et al., 2017). Animating a stylized face through motion capture of real humans is challenging due to the strongly differing ranges of motion, which we addressed in (Ribera et al., 2017).

Related Publications

- To stylize or not to stylize? The effect of shape and material stylization on the perception of computer-generated faces. ACM Transactions on Graphics 34(6) (Proc. SIGGRAPH Asia), 2015