Real-Time Hand Tracking

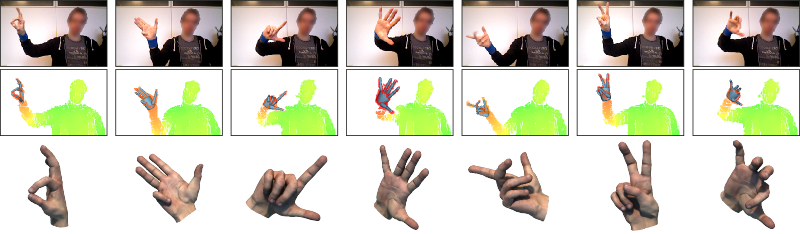

Recovering the full articulation of human hands from sensor data is a challenging problem due to the hand’s high number of degrees of freedom, the complexity of its motions, and artifacts in the input sensor data (Figure 1). We investigated different approaches for hand tracking and developed systems that are capable of real-time posture estimation and tracking of human hands based on RGBD input data.

Model-based approaches to hand tracking recover hand movements from RGBD sensors by fitting a virtual hand model to the sensor’s 3D point cloud data (Schröder et al., 2014; Tagliasacchi et al., 2015). Low-cost RGBD devices are easily deployable, but can exhibit strong sensor artifacts. These artifacts can cause errors when computing correspondences between the model and the sensor data. To ensure plausible hand posture reconstructions despite such data flaws, the geometric registration process can be made robust by employing various regularization priors.
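The correspondence step mentioned above can be illustrated with a minimal sketch. It assumes both the hand model and the sensor data are given as arrays of 3D points, and it uses a plain distance threshold to discard unreliable matches; the threshold is a simple stand-in for the robust handling in the cited work, and the function name and `max_dist` parameter are hypothetical.

```python
import numpy as np

def closest_point_correspondences(model_pts, sensor_pts, max_dist):
    """Match each sensor point to its nearest model point.

    model_pts:  (M, 3) array of points sampled on the hand model (assumed input).
    sensor_pts: (N, 3) array of points from the depth sensor.
    max_dist:   matches farther apart than this are rejected as likely artifacts.

    Returns (sensor_idx, model_idx), two index arrays of equal length.
    """
    # Pairwise distances between every sensor point and every model point.
    diffs = sensor_pts[:, None, :] - model_pts[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)

    # Nearest model point for each sensor point.
    nearest = dists.argmin(axis=1)
    nearest_dist = dists[np.arange(len(sensor_pts)), nearest]

    # Keep only matches within the distance threshold.
    keep = nearest_dist <= max_dist
    return np.flatnonzero(keep), nearest[keep]
```

The brute-force distance matrix is only practical for small point sets; a real-time system would likely use a spatial data structure or a projective lookup instead.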

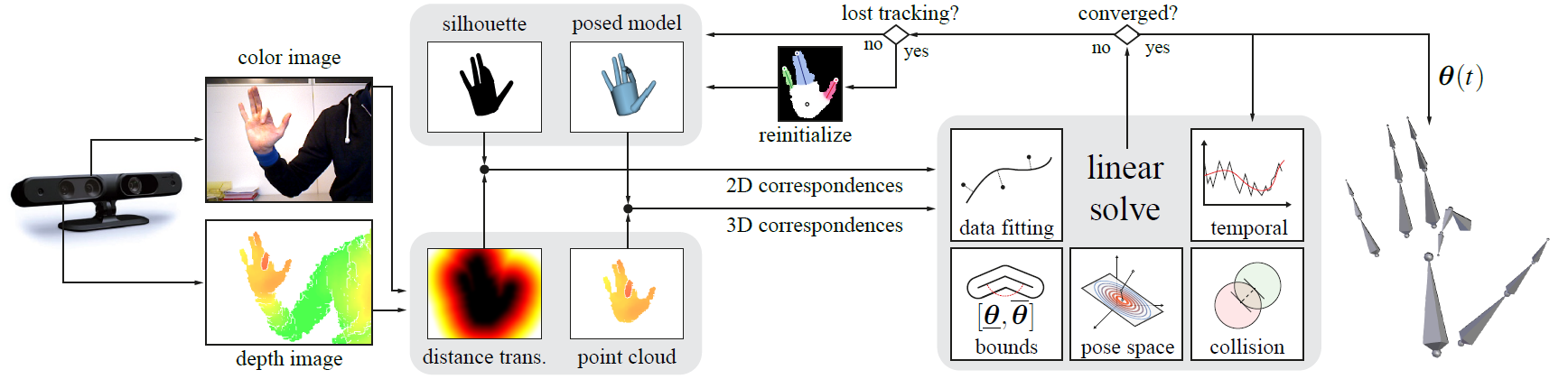

Using robust articulated-ICP (Tagliasacchi et al., 2015), hand tracking is formulated as a regularized articulated registration process, in which geometrical model fitting is combined with statistical, kinematic and temporal regularization priors. In this process, an energy encapsulating these combined quantities is minimized in a single linear solve (Figure 2). To account for occlusions and visibility constraints, a registration concept is employed that combines 2D and 3D alignment between the model and the data.
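To make the idea of combining a fitting term with priors in one linear solve more concrete, the following sketch performs a single linearized update of the pose parameters. It is an illustration under stated assumptions, not the formulation of the cited work: only a temporal prior and a damping term are included, and the names `J`, `r`, `w_temporal` and `w_damping` are hypothetical.

```python
import numpy as np

def regularized_step(J, r, theta, theta_prev, w_temporal=1.0, w_damping=1e-3):
    """One linearized pose update combining a data term with simple priors.

    Minimizes over the update d:
        ||J d + r||^2                               (linearized fitting term)
      + w_temporal * ||theta + d - theta_prev||^2   (stay close to previous frame)
      + w_damping  * ||d||^2                        (keep the step small)

    J: (m, n) Jacobian of the fitting residuals w.r.t. the pose parameters.
    r: (m,) current fitting residuals.
    """
    n = theta.size
    # Normal equations of the combined quadratic energy.
    A = J.T @ J + (w_temporal + w_damping) * np.eye(n)
    b = -J.T @ r - w_temporal * (theta - theta_prev)
    # All terms are resolved together in one linear solve.
    return theta + np.linalg.solve(A, b)
```

Because every term is quadratic in the update, additional priors of the same form (for example a statistical subspace prior) would add further blocks to `A` and `b` without changing the structure of the solve.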

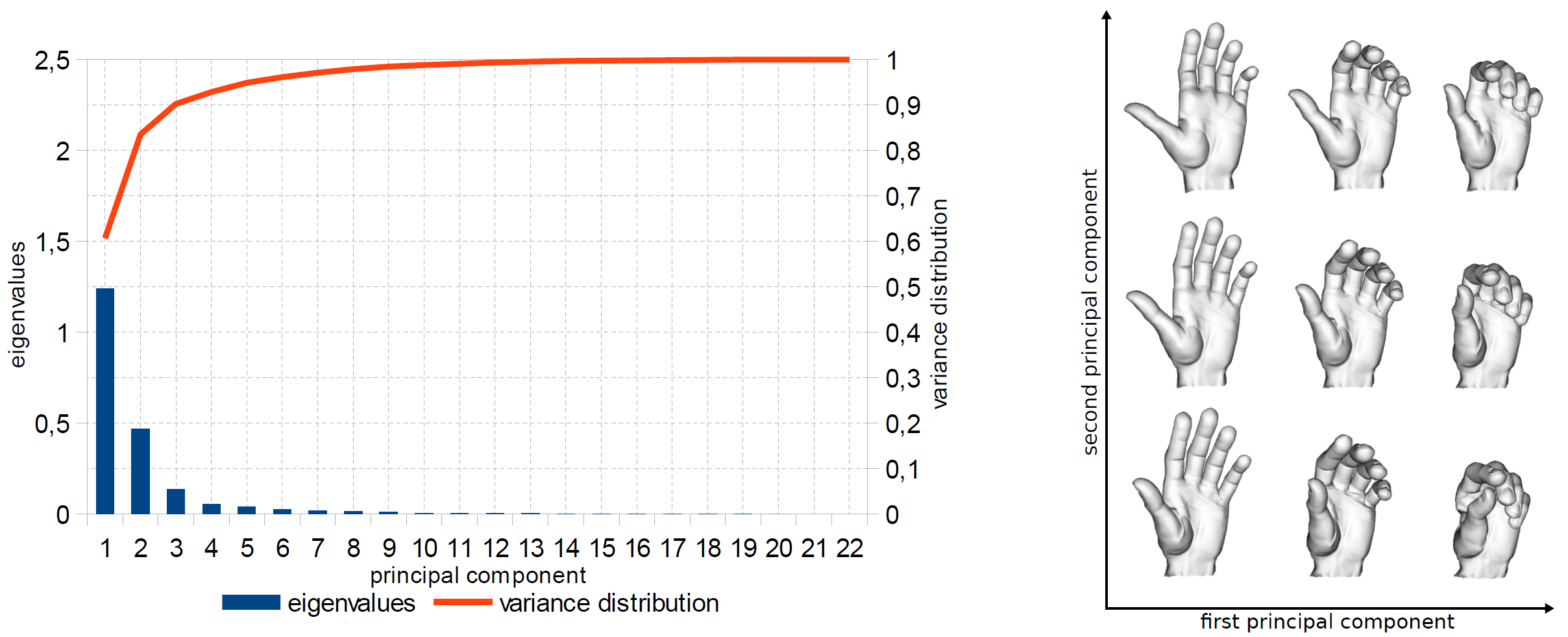

Beyond performing robust correspondence computations and enforcing kinematic constraints such as joint angle limits, temporal coherence and collision detection, statistical analysis of hand motions provides a valuable means of regularizing posture estimations. Performing PCA on a varied dataset of motion-captured hand movements exposes the correlations and redundancies present within hand articulations (Schröder et al., 2013). These correlations yield subspace representations of hand articulations (Figure 3), which improve the realism of hand posture estimations in real-time systems when faced with imperfect sensor data. The loss of information and flexibility caused by PCA dimension reduction can be compensated for by using an adaptive PCA model (Schröder et al., 2014) that is adjusted during real-time tracking to account for observed hand articulations that are not covered by the initial hand posture parameter subspace.
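The PCA step can be sketched as follows. This is a minimal example assuming each hand posture is stored as a row vector of joint angles; the function names are hypothetical, and the adaptive update of the subspace during tracking is not shown.

```python
import numpy as np

def fit_pose_subspace(poses, n_components):
    """Fit a PCA subspace to a set of hand postures.

    poses: (num_samples, num_joint_angles) array, one posture per row
           (assumed layout of the motion capture data).
    Returns the mean posture, an orthonormal basis with one component per row,
    and the fraction of variance the kept components explain.
    """
    mean = poses.mean(axis=0)
    # SVD of the centered data; rows of vt are the principal directions.
    _, s, vt = np.linalg.svd(poses - mean, full_matrices=False)
    basis = vt[:n_components]
    explained = (s[:n_components] ** 2).sum() / (s ** 2).sum()
    return mean, basis, explained

def project_to_subspace(pose, mean, basis):
    """Replace a posture by its closest point in the PCA subspace."""
    coeffs = basis @ (pose - mean)   # low-dimensional coordinates
    return mean + basis.T @ coeffs   # back to full joint-angle space
```

Projecting a noisy posture estimate with `project_to_subspace` pulls it toward articulations seen in the training data, which is the regularizing effect described above. The price is that postures outside the subspace cannot be represented exactly.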

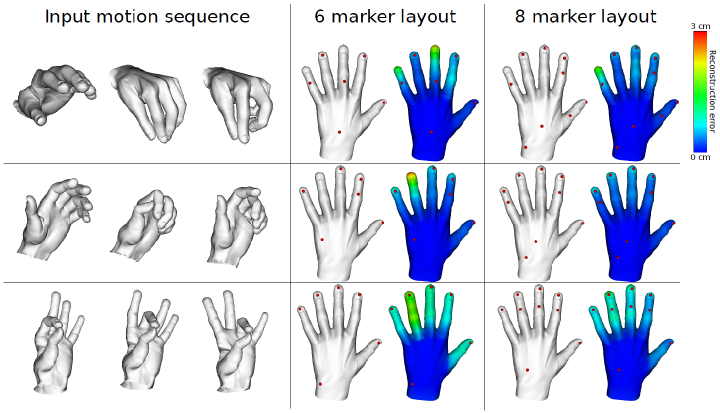

Subspace representations of hand articulations allow for the reconstruction of hand motions from sparse sensor data. Conversely, they can also be used to infer the minimal amount of input data necessary for robust hand posture reconstruction in the context of optical motion capture (Schröder et al., 2015). We addressed the problem of determining the optimal placement of a reduced number of markers in order to facilitate accurate posture reconstruction from sparse mocap marker data. Our method automatically generates functional layouts by optimizing for their numerical stability and geometric feasibility (Figure 4).
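As a rough illustration of selecting markers for numerical stability, the sketch below uses greedy D-optimal selection, a standard experimental-design heuristic swapped in here for illustration; it is not the optimization used in the cited work, and it ignores geometric feasibility entirely. It assumes each candidate marker comes with a 3 x n Jacobian of its position with respect to the n subspace parameters; all names are hypothetical.

```python
import numpy as np

def greedy_marker_layout(candidate_jacobians, n_markers, eps=1e-6):
    """Greedily pick markers that best constrain the posture parameters.

    candidate_jacobians: (num_candidates, 3, n_params) array; block i is the
        Jacobian of candidate marker i's 3D position w.r.t. the subspace
        parameters (assumed input).
    n_markers: number of markers to select.
    eps: small regularizer so the log-determinant is defined from the start.

    Returns the indices of the chosen candidates in selection order.
    """
    num_candidates, _, n_params = candidate_jacobians.shape
    if n_markers > num_candidates:
        raise ValueError("cannot select more markers than candidates")

    info = eps * np.eye(n_params)  # accumulated information matrix
    chosen = []
    for _ in range(n_markers):
        best_idx, best_score = None, -np.inf
        for i, J in enumerate(candidate_jacobians):
            if i in chosen:
                continue
            # Log-determinant of the information matrix if marker i were added.
            _, logdet = np.linalg.slogdet(info + J.T @ J)
            if logdet > best_score:
                best_idx, best_score = i, logdet
        chosen.append(best_idx)
        J_best = candidate_jacobians[best_idx]
        info = info + J_best.T @ J_best
    return chosen
```

A larger log-determinant means the selected markers constrain all parameter directions more evenly, which tends to give a better-conditioned reconstruction problem.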

Related Publications

- Analysis of Hand Synergies for Inverse Kinematics Hand Tracking. IEEE International Conference on Robotics and Automation, Workshop on Hand Synergies, 2013